
Are AI text-to-image generators pushing racist and sexist stereotypes?

- November 2, 2022

- Updated: June 16, 2025 at 8:26 PM

We’ve been covering a lot of interesting AI innovations recently, including the increasingly popular text-to-image generators, impressive text-to-video generators, and a brand-new translation protocol from Meta. However, as we rush head-first into the future, it is important to consider the challenges and consequences that come with new technologies. We only have to look at the chaos Facebook has wrought around the world to know that new technologies must be used with care. A new report by AI researcher Dr. Sasha Luccioni shows that this is true even for seemingly innocent tools such as DALL-E and other AI text-to-image generators. Let’s check it out.

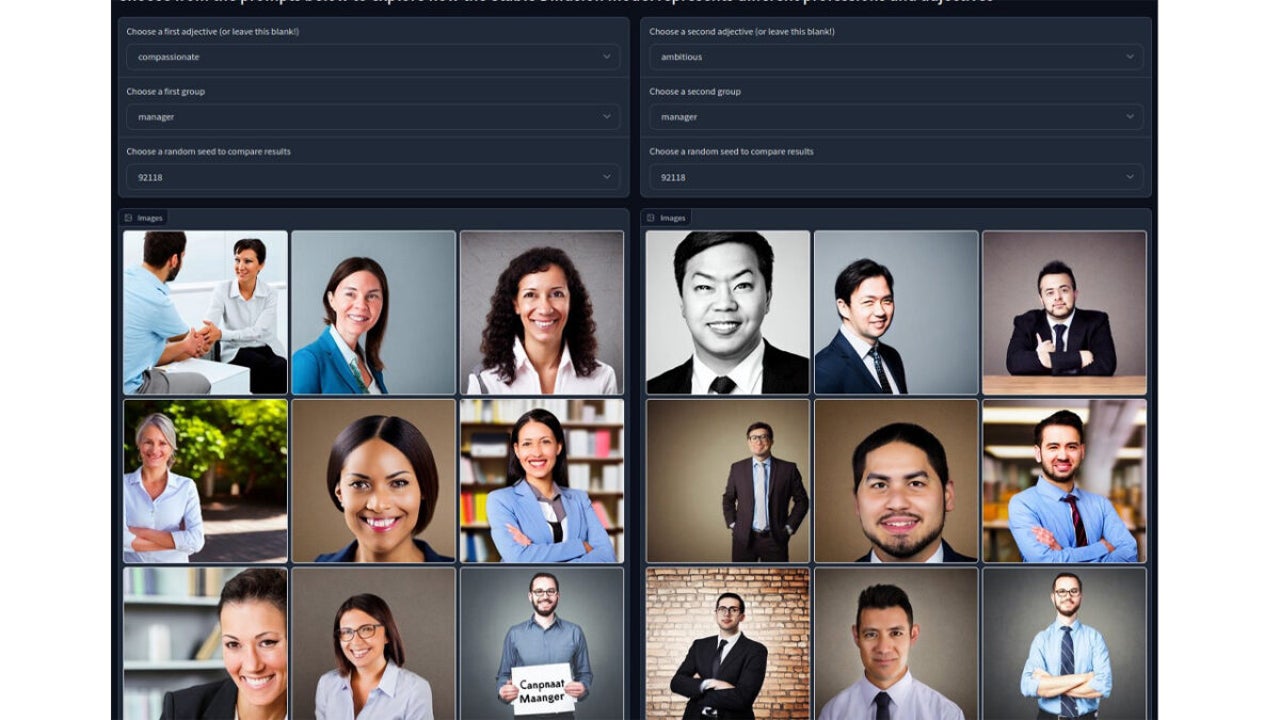

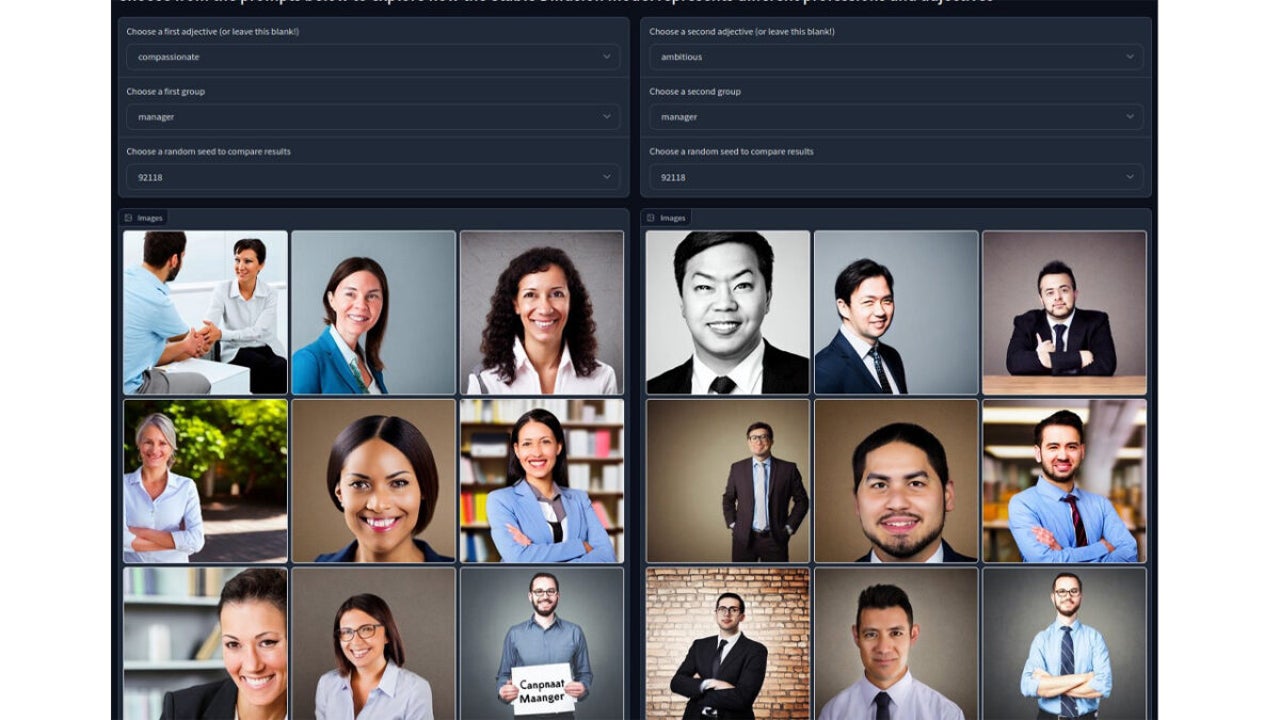

In her report for Hugging Face, Dr. Luccioni pointed out that AI text-to-image generators are reproducing harmful stereotypes related to gender and race. As the two side-by-side images above show, both created with the Stable Diffusion image generator, certain adjectives carry strong gender associations: ‘compassionate’ produced images of women, while ‘ambitious’ produced images of men.

To help raise awareness of this issue, Dr. Luccioni has created a tool that lets users explore the stereotypes reproduced for other professions and adjectives, covering both gender and race. The tool offers a list of 150 professions and 20 adjectives to choose from.

This tool is an important step toward a wider public understanding of the bias and discrimination in AI and machine learning systems that often go unseen and unconsidered. These algorithms can only make decisions and take actions based on the data used to train them, which means active steps need to be taken to ensure that the data are truly representative and not merely reflective of the biases that have long existed in the cultures and contexts in which they were collected. If we consider that AI algorithms are being given control over decisions affecting our lives and opportunities in all sorts of areas, from education to healthcare, it is clear just how important it is to get this right.

In other recent AI news, Meta has just handed over the governance of a prominent AI framework to the Linux Foundation.

Patrick Devaney is a news reporter for Softonic, keeping readers up to date on everything affecting their favorite apps and programs. His beat includes social media apps and sites like Facebook, Instagram, Reddit, Twitter, YouTube, and Snapchat. Patrick also covers antivirus and security issues, web browsers, the full Google suite of apps and programs, and operating systems like Windows, iOS, and Android.
